Variable-ratio schedule: reinforcement does not require a fixed or set number of responses before it can be obtained, as with slot machines in casinos.

Learning Objectives

- Distinguish between reinforcement schedules

Remember, the best way to teach a person or animal a behavior is to use positive reinforcement. For example, Skinner used positive reinforcement to teach rats to press a lever in a Skinner box. At first, the rat might randomly hit the lever while exploring the box, and out would come a pellet of food. After eating the pellet, what do you think the hungry rat did next? It hit the lever again, and received another pellet of food. Each time the rat hit the lever, a pellet of food came out. When an organism receives a reinforcer each time it displays a behavior, it is called continuous reinforcement. This reinforcement schedule is the quickest way to teach someone a behavior, and it is especially effective in training a new behavior. Let’s look back at the dog that was learning to sit earlier in the module. Now, each time he sits, you give him a treat. Timing is important here: you will be most successful if you present the reinforcer immediately after he sits, so that he can make an association between the target behavior (sitting) and the consequence (getting a treat).

Once a behavior is trained, researchers and trainers often turn to another type of reinforcement schedule: partial reinforcement. In partial reinforcement, also referred to as intermittent reinforcement, the person or animal does not get reinforced every time they perform the desired behavior. There are several different types of partial reinforcement schedules (Table 1). These schedules are described as either fixed or variable, and as either interval or ratio. Fixed means that the number of responses between reinforcements, or the amount of time between reinforcements, is set and unchanging. Variable means that the number of responses or the amount of time between reinforcements varies. Interval means the schedule is based on the time between reinforcements, and ratio means the schedule is based on the number of responses between reinforcements.

| Reinforcement Schedule | Description | Result | Example |
|---|---|---|---|
| Fixed interval | Reinforcement is delivered at predictable time intervals (e.g., after 5, 10, 15, and 20 minutes). | Moderate response rate with significant pauses after reinforcement | Hospital patient uses patient-controlled, doctor-timed pain relief |
| Variable interval | Reinforcement is delivered at unpredictable time intervals (e.g., after 5, 7, 10, and 20 minutes). | Moderate yet steady response rate | Checking Facebook |
| Fixed ratio | Reinforcement is delivered after a predictable number of responses (e.g., after 2, 4, 6, and 8 responses). | High response rate with pauses after reinforcement | Piecework: a factory worker paid for every *x* items manufactured |
| Variable ratio | Reinforcement is delivered after an unpredictable number of responses (e.g., after 1, 4, 5, and 9 responses). | High and steady response rate | Gambling |
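The fixed/variable and interval/ratio distinctions above amount to rules for deciding whether a given response earns a reinforcer. The Python sketch below encodes each rule as a small class with a `respond(t)` method that returns `True` when the response at time `t` (in seconds) is reinforced. It assumes, as is usual in the laboratory, that an interval schedule reinforces the first response made after the interval has elapsed. The class names, the `respond` method, and the uniform distributions used to draw the variable requirements are illustrative assumptions, not a standard specification.

```python
import random


class FixedRatio:
    """Reinforce every n-th response (FR-n)."""

    def __init__(self, n):
        self.n = n
        self.count = 0  # responses since the last reinforcer

    def respond(self, t):
        # Ratio schedules ignore the time t; only the response count matters.
        self.count += 1
        if self.count >= self.n:
            self.count = 0
            return True
        return False


class VariableRatio:
    """Reinforce after a number of responses that varies around `mean` (VR)."""

    def __init__(self, mean, rng):
        self.mean, self.rng = mean, rng
        self.count = 0
        self.required = self._draw()

    def _draw(self):
        # Assumption: requirement drawn uniformly from 1..2*mean-1, which averages `mean`.
        return self.rng.randint(1, 2 * self.mean - 1)

    def respond(self, t):
        self.count += 1
        if self.count >= self.required:
            self.count = 0
            self.required = self._draw()  # the next requirement is unpredictable
            return True
        return False


class FixedInterval:
    """Reinforce the first response made `interval` seconds or more after the last reinforcer (FI)."""

    def __init__(self, interval):
        self.interval = interval
        self.available_at = interval  # timing starts at t = 0

    def respond(self, t):
        if t >= self.available_at:
            self.available_at = t + self.interval  # restart the clock at delivery
            return True
        return False  # early responses earn nothing and do not reset the clock


class VariableInterval:
    """Like FixedInterval, but the waiting time varies around `mean` seconds (VI)."""

    def __init__(self, mean, rng):
        self.mean, self.rng = mean, rng
        self.available_at = self._draw()

    def _draw(self):
        # Assumption: waiting time drawn uniformly from 0..2*mean seconds.
        return self.rng.uniform(0, 2 * self.mean)

    def respond(self, t):
        if t >= self.available_at:
            self.available_at = t + self._draw()
            return True
        return False


# Usage: responses numbered 1..9 on FR-3, and responses at various times on FI-60.
fr = FixedRatio(3)
print([i for i in range(1, 10) if fr.respond(t=i)])  # → [3, 6, 9]

fi = FixedInterval(60)
print([t for t in [10, 30, 61, 62, 100, 121, 130] if fi.respond(t)])  # → [61, 121]
```

In this sketch, continuous reinforcement is simply the special case `FixedRatio(1)`. Passing a seeded `random.Random` to the variable schedules keeps runs reproducible, which makes it easier to compare schedules side by side.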

Now let’s combine these four terms. A fixed interval reinforcement schedule is when behavior is rewarded after a set amount of time. For example, June undergoes major surgery in a hospital. During recovery, she is expected to experience pain and will require prescription medications for pain relief. June is given an IV drip with a patient-controlled painkiller. Her doctor sets a limit: one dose per hour. June pushes a button when pain becomes difficult, and she receives a dose of medication. Since the reward (pain relief) only occurs on a fixed interval, there is no point in exhibiting the behavior when it will not be rewarded.

With a variable interval reinforcement schedule, the person or animal gets the reinforcement based on varying amounts of time, which are unpredictable. Say that Manuel is the manager at a fast-food restaurant. Every once in a while someone from the quality control division comes to Manuel’s restaurant. If the restaurant is clean and the service is fast, everyone on that shift earns a $20 bonus. Manuel never knows when the quality control person will show up, so he always tries to keep the restaurant clean and ensures that his employees provide prompt and courteous service. His effort at providing prompt service and keeping the restaurant clean stays steady because he wants his crew to earn the bonus.

With a fixed ratio reinforcement schedule, there is a set number of responses that must occur before the behavior is rewarded. Carla sells glasses at an eyeglass store, and she earns a commission every time she sells a pair of glasses. She always tries to sell people more pairs of glasses, including prescription sunglasses or a backup pair, so she can increase her commission. She does not care whether the person really needs the prescription sunglasses. Carla just wants her commission. The quality of what Carla sells does not matter because her commission is not based on quality; it’s only based on the number of pairs sold. This distinction in the quality of performance can help determine which reinforcement method is most appropriate for a particular situation. Fixed ratios are better suited to optimize the quantity of output, whereas a fixed interval, in which the reward is not quantity based, can lead to a higher quality of output.

In a variable ratio reinforcement schedule, the number of responses needed for a reward varies. This is the most powerful partial reinforcement schedule. An example of the variable ratio reinforcement schedule is gambling. Imagine that Sarah (generally a smart, thrifty woman) visits Las Vegas for the first time. She is not a gambler, but out of curiosity she puts a quarter into the slot machine, and then another, and another. Nothing happens. Two dollars in quarters later, her curiosity is fading, and she is just about to quit. But then, the machine lights up, bells go off, and Sarah gets 50 quarters back. That’s more like it! Sarah gets back to inserting quarters with renewed interest, and a few minutes later she has used up all her gains and is $10 in the hole. Now might be a sensible time to quit. And yet, she keeps putting money into the slot machine because she never knows when the next reinforcement is coming. She keeps thinking that with the next quarter she could win $50, or $100, or even more. Because most types of gambling pay out on a variable ratio schedule, people keep trying and hoping that the next time they will win big. This is one of the reasons that gambling is so addictive and so resistant to extinction.


In operant conditioning, extinction of a reinforced behavior occurs at some point after reinforcement stops, and the speed at which this happens depends on the reinforcement schedule. In a variable ratio schedule, the point of extinction comes very slowly, as described above. But in the other reinforcement schedules, extinction may come quickly. For example, if June presses the button for the pain relief medication before the allotted time her doctor has approved, no medication is administered. She is on a fixed interval reinforcement schedule (dosed hourly), so extinction occurs quickly when reinforcement doesn’t come at the expected time. Among the reinforcement schedules, variable ratio is the most productive and the most resistant to extinction. Fixed interval is the least productive and the easiest to extinguish (Figure 1).

Connect the Concepts: Gambling and the Brain

Skinner (1953) stated, “If the gambling establishment cannot persuade a patron to turn over money with no return, it may achieve the same effect by returning part of the patron’s money on a variable-ratio schedule” (p. 397).

Figure 2. Some research suggests that pathological gamblers use gambling to compensate for abnormally low levels of the hormone norepinephrine, which is associated with stress and is secreted in moments of arousal and thrill. (credit: Ted Murphy)

Skinner uses gambling as an example of the power of the variable-ratio reinforcement schedule for maintaining behavior even during long periods without any reinforcement. In fact, Skinner was so confident in his knowledge of gambling addiction that he even claimed he could turn a pigeon into a pathological gambler (“Skinner’s Utopia,” 1971). It is indeed true that variable-ratio schedules keep behavior quite persistent—just imagine the frequency of a child’s tantrums if a parent gives in even once to the behavior. The occasional reward makes it almost impossible to stop the behavior.

Recent research in rats has failed to support Skinner’s idea that training on variable-ratio schedules alone causes pathological gambling (Laskowski et al., 2019). However, other research suggests that gambling does seem to work on the brain in the same way as most addictive drugs, and so there may be some combination of brain chemistry and reinforcement schedule that could lead to problem gambling (Figure 6.14). Specifically, modern research shows the connection between gambling and the activation of the reward centers of the brain that use the neurotransmitter (brain chemical) dopamine (Murch & Clark, 2016). Interestingly, gamblers don’t even have to win to experience the “rush” of dopamine in the brain. “Near misses,” or almost winning but not actually winning, also have been shown to increase activity in the ventral striatum and other brain reward centers that use dopamine (Chase & Clark, 2010). These brain effects are almost identical to those produced by addictive drugs like cocaine and heroin (Murch & Clark, 2016). Based on the neuroscientific evidence showing these similarities, the DSM-5 now considers gambling an addiction, while earlier versions of the DSM classified gambling as an impulse control disorder.

In addition to dopamine, gambling also appears to involve other neurotransmitters, including norepinephrine and serotonin (Potenza, 2013). Norepinephrine is secreted when a person feels stress, arousal, or thrill. It may be that pathological gamblers use gambling to increase their levels of this neurotransmitter. Deficiencies in serotonin might also contribute to compulsive behavior, including a gambling addiction (Potenza, 2013).

It may be that pathological gamblers’ brains are different from those of other people, and perhaps this difference somehow led to their gambling addiction, as these studies seem to suggest. However, it is very difficult to ascertain the cause because it is impossible to conduct a true experiment (it would be unethical to try to turn randomly assigned participants into problem gamblers). Therefore, it may be that causation actually moves in the opposite direction: perhaps the act of gambling somehow changes neurotransmitter levels in some gamblers’ brains. It also is possible that some overlooked factor, or confounding variable, played a role in both the gambling addiction and the differences in brain chemistry.


Learning Objectives

- Outline the principles of operant conditioning.

- Explain how learning can be shaped through the use of reinforcement schedules and secondary reinforcers.

In classical conditioning the organism learns to associate new stimuli with natural biological responses such as salivation or fear. The organism does not learn something new but rather begins to perform an existing behaviour in the presence of a new signal. Operant conditioning, on the other hand, is learning that occurs based on the consequences of behaviour and can involve the learning of new actions. Operant conditioning occurs when a dog rolls over on command because it has been praised for doing so in the past, when a schoolroom bully threatens his classmates because doing so allows him to get his way, and when a child gets good grades because her parents threaten to punish her if she doesn’t. In operant conditioning the organism learns from the consequences of its own actions.

How Reinforcement and Punishment Influence Behaviour: The Research of Thorndike and Skinner

Psychologist Edward L. Thorndike (1874-1949) was the first scientist to systematically study operant conditioning. In his research Thorndike (1898) observed cats who had been placed in a “puzzle box” from which they tried to escape (“Video Clip: Thorndike’s Puzzle Box”). At first the cats scratched, bit, and swatted haphazardly, without any idea of how to get out. But eventually, and accidentally, they pressed the lever that opened the door and exited to their prize, a scrap of fish. The next time the cat was constrained within the box, it attempted fewer of the ineffective responses before carrying out the successful escape, and after several trials the cat learned to almost immediately make the correct response.

Observing these changes in the cats’ behaviour led Thorndike to develop his law of effect, the principle that responses that create a typically pleasant outcome in a particular situation are more likely to occur again in a similar situation, whereas responses that produce a typically unpleasant outcome are less likely to occur again in the situation (Thorndike, 1911). The essence of the law of effect is that successful responses, because they are pleasurable, are “stamped in” by experience and thus occur more frequently. Unsuccessful responses, which produce unpleasant experiences, are “stamped out” and subsequently occur less frequently.

Video: Thorndike’s Puzzle Box [http://www.youtube.com/watch?v=BDujDOLre-8]. When Thorndike placed his cats in a puzzle box, he found that they learned to engage in the important escape behaviour faster after each trial. Thorndike described the learning that follows reinforcement in terms of the law of effect.

The influential behavioural psychologist B. F. Skinner (1904-1990) expanded on Thorndike’s ideas to develop a more complete set of principles to explain operant conditioning. Skinner created specially designed environments known as operant chambers (usually called Skinner boxes) to systematically study learning. A Skinner box (operant chamber) is a structure that is big enough to fit a rodent or bird and that contains a bar or key that the organism can press or peck to release food or water. It also contains a device to record the animal’s responses (Figure 10.5).

The most basic of Skinner’s experiments was quite similar to Thorndike’s research with cats. A rat placed in the chamber reacted as one might expect, scurrying about the box and sniffing and clawing at the floor and walls. Eventually the rat chanced upon a lever, which it pressed to release pellets of food. The next time around, the rat took a little less time to press the lever, and on successive trials, the time it took to press the lever became shorter and shorter. Soon the rat was pressing the lever as fast as it could eat the food that appeared. As predicted by the law of effect, the rat had learned to repeat the action that brought about the food and cease the actions that did not.

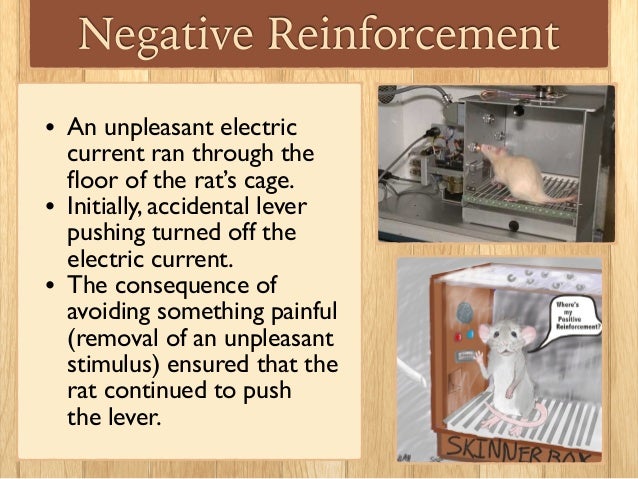

Skinner studied, in detail, how animals changed their behaviour through reinforcement and punishment, and he developed terms that explained the processes of operant learning (Table 10.1, “How Positive and Negative Reinforcement and Punishment Influence Behaviour”). Skinner used the term reinforcer to refer to any event that strengthens or increases the likelihood of a behaviour, and the term punisher to refer to any event that weakens or decreases the likelihood of a behaviour. He used the terms positive and negative to refer to whether a stimulus was presented or removed, respectively. Thus, positive reinforcement strengthens a response by presenting something pleasant after the response, and negative reinforcement strengthens a response by reducing or removing something unpleasant. For example, giving a child praise for completing his homework represents positive reinforcement, whereas taking aspirin to reduce the pain of a headache represents negative reinforcement. In both cases, the reinforcement makes it more likely that the behaviour will occur again in the future.

Table 10.1 How Positive and Negative Reinforcement and Punishment Influence Behaviour.

| Operant conditioning term | Description | Outcome | Example |
|---|---|---|---|
| Positive reinforcement | Add or increase a pleasant stimulus | Behaviour is strengthened | Giving a student a prize after he or she gets an A on a test |
| Negative reinforcement | Reduce or remove an unpleasant stimulus | Behaviour is strengthened | Taking painkillers that eliminate pain increases the likelihood that you will take painkillers again |
| Positive punishment | Present or add an unpleasant stimulus | Behaviour is weakened | Giving a student extra homework after he or she misbehaves in class |
| Negative punishment | Reduce or remove a pleasant stimulus | Behaviour is weakened | Taking away a teen’s computer after he or she misses curfew |

Reinforcement, either positive or negative, works by increasing the likelihood of a behaviour. Punishment, on the other hand, refers to any event that weakens or reduces the likelihood of a behaviour. Positive punishment weakens a response by presenting something unpleasant after the response, whereas negative punishment weakens a response by reducing or removing something pleasant. A child who is given extra chores after fighting with a sibling (positive punishment) or who loses out on the opportunity to go to recess after getting a poor grade (negative punishment) is less likely to repeat these behaviours.

Although the distinction between reinforcement (which increases behaviour) and punishment (which decreases it) is usually clear, in some cases it is difficult to determine whether a reinforcer is positive or negative. On a hot day a cool breeze could be seen as a positive reinforcer (because it brings in cool air) or a negative reinforcer (because it removes hot air). In other cases, reinforcement can be both positive and negative. One may smoke a cigarette both because it brings pleasure (positive reinforcement) and because it eliminates the craving for nicotine (negative reinforcement).

It is also important to note that reinforcement and punishment are not simply opposites. The use of positive reinforcement in changing behaviour is almost always more effective than using punishment. This is because positive reinforcement makes the person or animal feel better, helping create a positive relationship with the person providing the reinforcement. Types of positive reinforcement that are effective in everyday life include verbal praise or approval, the awarding of status or prestige, and direct financial payment. Punishment, on the other hand, is more likely to create only temporary changes in behaviour because it is based on coercion and typically creates a negative and adversarial relationship with the person providing the punishment. When the person who provides the punishment leaves the situation, the unwanted behaviour is likely to return.

Creating Complex Behaviours through Operant Conditioning

Perhaps you remember watching a movie or being at a show in which an animal — maybe a dog, a horse, or a dolphin — did some pretty amazing things. The trainer gave a command and the dolphin swam to the bottom of the pool, picked up a ring on its nose, jumped out of the water through a hoop in the air, dived again to the bottom of the pool, picked up another ring, and then took both of the rings to the trainer at the edge of the pool. The animal was trained to do the trick, and the principles of operant conditioning were used to train it. But these complex behaviours are a far cry from the simple stimulus-response relationships that we have considered thus far. How can reinforcement be used to create complex behaviours such as these?

One way to expand the use of operant learning is to modify the schedule on which the reinforcement is applied. To this point we have only discussed a continuous reinforcement schedule, in which the desired response is reinforced every time it occurs; whenever the dog rolls over, for instance, it gets a biscuit. Continuous reinforcement results in relatively fast learning but also rapid extinction of the desired behaviour once the reinforcer disappears. The problem is that because the organism is used to receiving the reinforcement after every behaviour, the responder may give up quickly when it doesn’t appear.

Most real-world reinforcers are not continuous; they occur on a partial (or intermittent) reinforcement schedule — a schedule in which the responses are sometimes reinforced and sometimes not. In comparison to continuous reinforcement, partial reinforcement schedules lead to slower initial learning, but they also lead to greater resistance to extinction. Because the reinforcement does not appear after every behaviour, it takes longer for the learner to determine that the reward is no longer coming, and thus extinction is slower. The four types of partial reinforcement schedules are summarized in Table 10.2, “Reinforcement Schedules.”

Table 10.2 Reinforcement Schedules.

| Reinforcement schedule | Explanation | Real-world example |
|---|---|---|
| Fixed-ratio | Behaviour is reinforced after a specific number of responses. | Factory workers who are paid according to the number of products they produce |
| Variable-ratio | Behaviour is reinforced after an average, but unpredictable, number of responses. | Payoffs from slot machines and other games of chance |
| Fixed-interval | Behaviour is reinforced for the first response after a specific amount of time has passed. | People who earn a monthly salary |
| Variable-interval | Behaviour is reinforced for the first response after an average, but unpredictable, amount of time has passed. | Person who checks email for messages |

Partial reinforcement schedules are determined by whether the reinforcement is presented on the basis of the time that elapses between reinforcement (interval) or on the basis of the number of responses that the organism engages in (ratio), and by whether the reinforcement occurs on a regular (fixed) or unpredictable (variable) schedule. In a fixed-interval schedule, reinforcement occurs for the first response made after a specific amount of time has passed. For instance, on a one-minute fixed-interval schedule the animal receives a reinforcement every minute, assuming it engages in the behaviour at least once during the minute. As you can see in Figure 10.6, “Examples of Response Patterns by Animals Trained under Different Partial Reinforcement Schedules,” animals under fixed-interval schedules tend to slow down their responding immediately after the reinforcement but then increase the behaviour again as the time of the next reinforcement gets closer. (Most students study for exams the same way.) In a variable-interval schedule, the reinforcers appear on an interval schedule, but the timing is varied around the average interval, making the actual appearance of the reinforcer unpredictable. An example might be checking your email: you are reinforced by receiving messages that come, on average, say, every 30 minutes, but the reinforcement occurs only at random times. Interval reinforcement schedules tend to produce slow and steady rates of responding.

In a fixed-ratio schedule, a behaviour is reinforced after a specific number of responses. For instance, a rat’s behaviour may be reinforced after it has pressed a key 20 times, or a salesperson may receive a bonus after he or she has sold 10 products. As you can see in Figure 10.6, “Examples of Response Patterns by Animals Trained under Different Partial Reinforcement Schedules,” once the organism has learned to act in accordance with the fixed-ratio schedule, it will pause only briefly when reinforcement occurs before returning to a high level of responsiveness. A variable-ratio schedule provides reinforcers after a number of responses that varies unpredictably around a specific average. Winning money from slot machines or on a lottery ticket is an example of reinforcement that occurs on a variable-ratio schedule. For instance, a slot machine (see Figure 10.7, “Slot Machine”) may be programmed to provide a win every 20 times the user pulls the handle, on average. Ratio schedules tend to produce high rates of responding because reinforcement increases as the number of responses increases.
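The "win every 20 pulls, on average" example can be checked with a short simulation. The Python sketch below assumes that each pull wins independently with probability 1/20. Strictly, that is a random-ratio schedule, a common way of programming a variable ratio, and it is not a claim about how any real slot machine is built. The helper name `pulls_needed_per_win` is hypothetical.

```python
import random


def pulls_needed_per_win(p_win, n_pulls, seed=0):
    """Simulate a machine that pays off on each pull with probability p_win.

    Returns a list with the number of pulls each win took (hypothetical helper).
    """
    rng = random.Random(seed)
    gaps, since_last_win = [], 0
    for _ in range(n_pulls):
        since_last_win += 1
        if rng.random() < p_win:  # every pull is an independent chance to win
            gaps.append(since_last_win)
            since_last_win = 0
    return gaps  # pulls after the final win are not counted


gaps = pulls_needed_per_win(p_win=1 / 20, n_pulls=100_000)
print(round(sum(gaps) / len(gaps), 1))  # close to 20: the average requirement
print(min(gaps), max(gaps))             # yet individual wins are unpredictable
```

Because no pull is ever "due" under this assumption, the gap between wins can be as short as one pull or many times the average, which is the unpredictability the text credits with sustaining high rates of responding.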

Complex behaviours are also created through shaping, the process of guiding an organism’s behaviour to the desired outcome through the use of successive approximation to a final desired behaviour. Skinner made extensive use of this procedure in his boxes. For instance, he could train a rat to press a bar two times to receive food by first providing food when the animal moved near the bar. When that behaviour had been learned, Skinner would begin to provide food only when the rat touched the bar. Further shaping limited the reinforcement to only when the rat pressed the bar, to when it pressed the bar and touched it a second time, and finally to only when it pressed the bar twice. Although it can take a long time, in this way operant conditioning can create chains of behaviours that are reinforced only when they are completed.
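Shaping can be read as a reinforcement rule whose criterion tightens over time. The Python sketch below assumes that the five approximations in the bar-press example can be ranked so that a later step also satisfies every earlier one, and that the trainer raises the criterion after a fixed number of reinforced responses. Both the ranking in `STEPS` and the `successes_to_advance=3` rule are hypothetical simplifications, not Skinner’s actual procedure.

```python
# Hypothetical ranking of the successive approximations in the bar-press example:
# a higher index is a closer approximation to the final behaviour.
STEPS = [
    "moves near the bar",
    "touches the bar",
    "presses the bar",
    "presses the bar and touches it again",
    "presses the bar twice",
]


def shape(observed, successes_to_advance=3):
    """Reinforce responses that meet the current criterion, tightening it over time.

    `observed` is a sequence of indices into STEPS. Assumes that reaching a later
    step also satisfies every earlier one, and that the criterion moves up one
    step after `successes_to_advance` reinforced responses at the current level.
    Returns a list of True/False (reinforced or not) and the final criterion index.
    """
    criterion, hits = 0, 0
    reinforced = []
    for level in observed:
        ok = level >= criterion  # does this response meet the current criterion?
        reinforced.append(ok)
        if ok:
            hits += 1
            if hits == successes_to_advance and criterion < len(STEPS) - 1:
                criterion += 1  # raise the bar to the next approximation
                hits = 0
    return reinforced, criterion


trials = [0, 0, 0, 0, 1, 1, 1, 2, 2, 2, 3, 3, 3, 4]
flags, final = shape(trials)
print(flags[3])      # → False: merely approaching the bar no longer pays off
print(STEPS[final])  # → presses the bar twice
```

The fourth trial shows the key idea: a behaviour that earned food early in training goes unreinforced once the criterion has moved on.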

Reinforcing animals if they correctly discriminate between similar stimuli allows scientists to test the animals’ ability to learn, and the discriminations that they can make are sometimes remarkable. Pigeons have been trained to distinguish between images of Charlie Brown and the other Peanuts characters (Cerella, 1980), and between different styles of music and art (Porter & Neuringer, 1984; Watanabe, Sakamoto & Wakita, 1995).

Behaviours can also be trained through the use of secondary reinforcers. Whereas a primary reinforcer includes stimuli that are naturally preferred or enjoyed by the organism, such as food, water, and relief from pain, a secondary reinforcer (sometimes called a conditioned reinforcer) is a neutral event that has become associated with a primary reinforcer through classical conditioning. An example of a secondary reinforcer would be the whistle blown by an animal trainer, which has been associated over time with the primary reinforcer, food. An example of an everyday secondary reinforcer is money. We enjoy having money, not so much for the stimulus itself, but rather for the primary reinforcers (the things that money can buy) with which it is associated.

Key Takeaways

- Edward Thorndike developed the law of effect: the principle that responses that create a typically pleasant outcome in a particular situation are more likely to occur again in a similar situation, whereas responses that produce a typically unpleasant outcome are less likely to occur again in the situation.

- B. F. Skinner expanded on Thorndike’s ideas to develop a set of principles to explain operant conditioning.

- Positive reinforcement strengthens a response by presenting something that is typically pleasant after the response, whereas negative reinforcement strengthens a response by reducing or removing something that is typically unpleasant.

- Positive punishment weakens a response by presenting something typically unpleasant after the response, whereas negative punishment weakens a response by reducing or removing something that is typically pleasant.

- Reinforcement may be either partial or continuous. Partial reinforcement schedules are determined by whether the reinforcement is presented on the basis of the time that elapses between reinforcements (interval) or on the basis of the number of responses that the organism engages in (ratio), and by whether the reinforcement occurs on a regular (fixed) or unpredictable (variable) schedule.

- Complex behaviours may be created through shaping, the process of guiding an organism’s behaviour to the desired outcome through the use of successive approximation to a final desired behaviour.

Exercises and Critical Thinking

- Give an example from daily life of each of the following: positive reinforcement, negative reinforcement, positive punishment, negative punishment.

- Consider the reinforcement techniques that you might use to train a dog to catch and retrieve a Frisbee that you throw to it.

- Watch the following two videos from current television shows. Can you determine which learning procedures are being demonstrated?

- The Office: http://www.break.com/usercontent/2009/11/the-office-altoid-experiment-1499823

- The Big Bang Theory [YouTube]: http://www.youtube.com/watch?v=JA96Fba-WHk

Image Attributions

Figure 10.5: “Skinner box” (http://en.wikipedia.org/wiki/File:Skinner_box_photo_02.jpg) is licensed under the CC BY SA 3.0 license (http://creativecommons.org/licenses/by-sa/3.0/deed.en). “Skinner box scheme” by Andreas1 (http://en.wikipedia.org/wiki/File:Skinner_box_scheme_01.png) is licensed under the CC BY SA 3.0 license (http://creativecommons.org/licenses/by-sa/3.0/deed.en)

Figure 10.6: Adapted from Kassin (2003).

Figure 10.7: “Slot Machines in the Hard Rock Casino” by Ted Murphy (http://commons.wikimedia.org/wiki/File:HardRockCasinoSlotMachines.jpg) is licensed under CC BY 2.0. (http://creativecommons.org/licenses/by/2.0/deed.en).

References

Cerella, J. (1980). The pigeon’s analysis of pictures. Pattern Recognition, 12, 1–6.

Kassin, S. (2003). Essentials of psychology. Upper Saddle River, NJ: Prentice Hall. Retrieved from Essentials of Psychology Prentice Hall Companion Website: http://wps.prenhall.com/hss_kassin_essentials_1/15/3933/1006917.cw/index.html

Porter, D., & Neuringer, A. (1984). Music discriminations by pigeons. Journal of Experimental Psychology: Animal Behavior Processes, 10(2), 138–148.

Thorndike, E. L. (1898). Animal intelligence: An experimental study of the associative processes in animals. Washington, DC: American Psychological Association.

Thorndike, E. L. (1911). Animal intelligence: Experimental studies. New York, NY: Macmillan. Retrieved from http://www.archive.org/details/animalintelligen00thor

Watanabe, S., Sakamoto, J., & Wakita, M. (1995). Pigeons’ discrimination of painting by Monet and Picasso. Journal of the Experimental Analysis of Behavior, 63(2), 165–174.